Xiaoyong Shen¹, Li Xu², Qi Zhang¹, Jiaya Jia¹

¹The Chinese University of Hong Kong, ²Image & Visual Computing Lab, Lenovo R&T

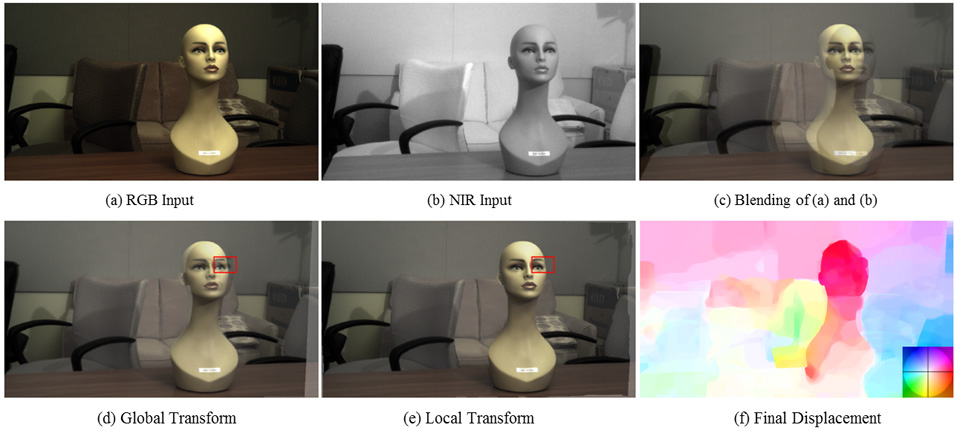

Overview of our multi-modal and multi-spectral registration framework. Given the image pair in (a) and (b), our method produces the high-quality registration result in (e). The displacement field is shown in (f).

Abstract

Images now come in different forms (color, near-infrared, depth, etc.) due to the development of special and powerful cameras in computer vision and computational photography. The establishment of cross-modal correspondence between them, however, has lagged behind. We address this challenging dense matching problem, taking into account the structure variation that may exist in these image sets, and introduce a new model and solution. Our main contributions include designing a descriptor named robust selective normalized cross correlation (RSNCC) to establish dense pixel correspondence in input images, and proposing its mathematical parameterization to make optimization tractable. A computationally robust framework including global and local matching phases is also established. We build a multi-modal dataset including natural images with labeled sparse correspondence. Our method will benefit image and vision applications that require accurate image alignment.
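To illustrate why a correlation-based measure is a natural starting point for cross-modal matching, the sketch below computes plain normalized cross correlation (NCC) between two small patches and turns its absolute value into a cost. This is not the RSNCC descriptor defined in the paper; it is only a minimal example of the underlying idea, and the function names `ncc` and `abs_ncc_cost`, the flat-list patch representation, and the toy patch values are all assumptions made for illustration.

```python
import math


def ncc(patch_a, patch_b, eps=1e-12):
    """Normalized cross correlation of two equally sized patches (flat lists).

    Returns a value in [-1, 1]; 0.0 is returned when either patch is flat,
    since the correlation is undefined there.
    """
    if len(patch_a) != len(patch_b) or not patch_a:
        raise ValueError("patches must be non-empty and of equal length")
    mean_a = sum(patch_a) / len(patch_a)
    mean_b = sum(patch_b) / len(patch_b)
    dev_a = [a - mean_a for a in patch_a]
    dev_b = [b - mean_b for b in patch_b]
    numerator = sum(x * y for x, y in zip(dev_a, dev_b))
    denominator = math.sqrt(sum(x * x for x in dev_a) * sum(y * y for y in dev_b))
    if denominator < eps:
        return 0.0
    return numerator / denominator


def abs_ncc_cost(patch_a, patch_b):
    """Illustrative cost in [0, 1]: near 0 for strongly (anti-)correlated patches."""
    return 1.0 - abs(ncc(patch_a, patch_b))


# Toy 3x3 patches, flattened. The second has inverted and rescaled contrast,
# a hypothetical stand-in for how one structure may look in another modality.
rgb_patch = [10, 20, 30, 40, 50, 60, 70, 80, 90]
nir_patch = [200 - 2 * v for v in rgb_patch]
shuffled = [50, 10, 90, 30, 70, 20, 80, 40, 60]  # unrelated structure

print(abs_ncc_cost(rgb_patch, nir_patch))  # low cost despite inverted contrast
print(abs_ncc_cost(rgb_patch, shuffled))   # higher cost for unrelated structure
```

Taking the absolute value makes the cost insensitive to contrast inversion and to linear intensity changes, which is one reason correlation-style measures are often preferred over raw intensity differences when the two inputs come from different sensors.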

Results

Multi-modal and Multi-spectral Registration

[Interactive figure: RGB input and NIR image pair, with registration results from different algorithms.]

More results and applications can be found in our supplementary file.

Downloads

"Multi-modal and Multi-spectral Registration for Natural Images". Xiaoyong Shen, Li Xu, Qi Zhang, Jiaya Jia. European Conference on Computer Vision (ECCV), 2014.
