Ongoing R&D Projects

R&D Projects in VR 2007 (.pdf)

Video Demos

Image-based modeling & rendering

Real-time shadow rendering of dynamic scenes

Augmented image/video processing

• Texture synthesis can produce textures with continuous changes in orientation and scale from simple samples. Dynamic textures describe textured surfaces with repetitive, time-varying visual patterns that exhibit stationarity properties. We are working on modeling smooth, user-guided variations of texture orientation and scale on arbitrary 3D surfaces, using stretch-based mesh parametrization to handle perspective foreshortening and large distortion, and Poisson-based refinement to correct distortion at fine scales. Local and global optimizations are designed to obtain accurate and smooth motion information for dynamic objects.
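One common way to make a motion field both accurate and smooth is a global quadratic energy: a data term that keeps each motion vector close to its local estimate, plus a smoothness term that penalizes differences between 4-neighbours. The sketch below is a minimal illustration under that assumption, not the project's actual formulation; the function name `smooth_motion_field` and the weight `lam` are hypothetical. Setting the gradient of the energy to zero gives, per pixel, (1 + lam * n) * f = g + lam * (sum of neighbours), where n is the number of neighbours, and a Jacobi iteration solves that system.

```python
from typing import List, Tuple

Vec = Tuple[float, float]


def smooth_motion_field(flow: List[List[Vec]], lam: float = 1.0, iters: int = 200) -> List[List[Vec]]:
    """Hypothetical Jacobi solver for
    min sum |f - g|^2 + lam * sum over 4-neighbour pairs |f_i - f_j|^2.

    `flow` holds the noisy per-pixel motion estimates g as (dx, dy) tuples.
    Border pixels simply have fewer neighbours, so no padding is needed.
    """
    h, w = len(flow), len(flow[0])
    cur = [list(row) for row in flow]
    for _ in range(iters):
        nxt = []
        for y in range(h):
            row = []
            for x in range(w):
                sx = sy = 0.0
                n = 0
                for nx, ny in ((x - 1, y), (x + 1, y), (x, y - 1), (x, y + 1)):
                    if 0 <= nx < w and 0 <= ny < h:
                        sx += cur[ny][nx][0]
                        sy += cur[ny][nx][1]
                        n += 1
                gx, gy = flow[y][x]
                denom = 1.0 + lam * n
                # Stationarity condition: (1 + lam*n) f = g + lam * sum(neighbours).
                row.append(((gx + lam * sx) / denom, (gy + lam * sy) / denom))
            nxt.append(row)
        cur = nxt
    return cur
```

Because the smoothness term only compares neighbours, a constant field is left unchanged, while an isolated outlier is pulled toward its surroundings; at convergence the field's mean equals the mean of the input estimates.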

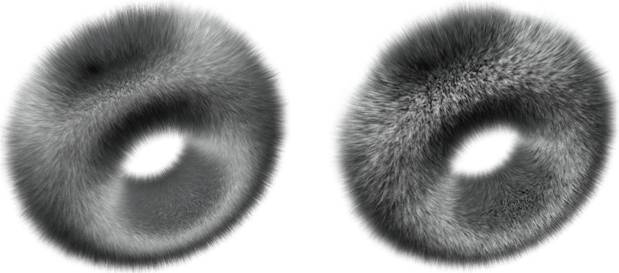

Dynamic texture synthesis

Our approach synthesizes dynamic color textures (DCTs) by compressing the UV channels, which reduces the size of the matrix computations and the memory footprint while preserving the visual quality of the dynamic textures and keeping their dynamic behavior similar to that of the original input. We separate the motions into different scales or frequencies; the dynamic feature spaces are analyzed during modeling and then mapped to a global coordinate system to preserve spatial continuity. We also carry out a comparative study of different multi-resolution descriptors (LP, HW, SP) to improve the DCT synthesis results.
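Assuming "UV" refers to the chrominance channels of a YUV color representation, one simple way to shrink each frame before any matrix computation is to keep luminance at full resolution and average U and V over 2x2 blocks, as in standard chroma subsampling. The sketch uses the BT.601 luma weights; `compress_frame` and `value_count` are hypothetical helpers, and the actual DCT pipeline may compress the channels differently.

```python
from typing import List, Tuple

RGB = Tuple[float, float, float]
Plane = List[List[float]]


def rgb_to_yuv(r: float, g: float, b: float) -> Tuple[float, float, float]:
    """Analog YUV with BT.601 luma weights."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    u = 0.492 * (b - y)
    v = 0.877 * (r - y)
    return y, u, v


def compress_frame(frame: List[List[RGB]]) -> Tuple[Plane, Plane, Plane]:
    """Hypothetical helper: keep Y at full resolution, average U and V over 2x2 blocks.

    Assumes the frame height and width are both even.
    """
    h, w = len(frame), len(frame[0])
    yuv = [[rgb_to_yuv(*px) for px in row] for row in frame]
    luma = [[p[0] for p in row] for row in yuv]

    def pooled(ch: int) -> Plane:
        # Mean of each 2x2 block of channel `ch` (1 = U, 2 = V).
        return [
            [sum(yuv[r + dr][c + dc][ch] for dr in (0, 1) for dc in (0, 1)) / 4.0
             for c in range(0, w, 2)]
            for r in range(0, h, 2)
        ]

    return luma, pooled(1), pooled(2)


def value_count(*planes: Plane) -> int:
    """Hypothetical helper: number of stored samples across all planes."""
    return sum(len(row) for plane in planes for row in plane)
```

For a 4x4 RGB frame, the 48 input values become 16 + 4 + 4 = 24 stored values, halving the data before any per-frame matrix is assembled.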

Video demo (manipulative DCTs)

Interactive Soft-touch Deformable Modeling

Haptics studies touch-based interfaces that provide force and tactile feedback to users. It is an active research field for improving the realism and immersion of virtual environments, especially in simulations where operators must touch, grasp, and manipulate objects in the virtual world. Haptic simulation of deformable objects must estimate not only the amount of object deformation but also the interaction forces reflected to the user through the haptic device. Our work focuses on a novel soft-touch haptic model of dynamic objects based on metaball constraints. The haptic-constraint tools are first attracted to the target position and direction on the object, and then control the object's deformation within constrained local areas.
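A minimal sketch of metaball-constrained local deformation, under stated assumptions: the haptic tool is modelled as a few metaballs, each with a compactly supported polynomial falloff (1 - r²/R²)², and the summed field (clamped to 1) weights how much of the tool's displacement each vertex receives, so only vertices inside the metaballs move. The reaction force is a simple Hooke's-law spring. The function names, the falloff, and the spring model are all hypothetical choices for illustration, not the project's model.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def falloff(r: float, radius: float) -> float:
    """Hypothetical compactly supported weight: 1 at the centre, 0 at and beyond `radius`."""
    if r >= radius:
        return 0.0
    t = 1.0 - (r * r) / (radius * radius)
    return t * t


def metaball_field(p: Vec3, balls: Sequence[Tuple[Vec3, float]]) -> float:
    """Sum of the falloff contributions of every (centre, radius) metaball."""
    return sum(falloff(math.dist(p, c), rad) for c, rad in balls)


def deform_local(vertices: List[Vec3], balls: Sequence[Tuple[Vec3, float]], displacement: Vec3) -> List[Vec3]:
    """Move each vertex by the tool displacement scaled by the clamped field.

    Vertices outside every metaball get weight 0, so the deformation stays local.
    """
    out = []
    for v in vertices:
        w = min(1.0, metaball_field(v, balls))
        out.append(tuple(vi + w * di for vi, di in zip(v, displacement)))
    return out


def feedback_force(displacement: Vec3, stiffness: float) -> Vec3:
    """Assumed Hooke's-law reaction force sent back to the haptic device."""
    return tuple(-stiffness * d for d in displacement)
```

Using a compact falloff means vertices beyond the tool's radius cost nothing to skip, which matters at haptic update rates.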

Advanced VR-based Tools in Surgical Simulation

Interactive computer-based simulation is gaining acceptance in complex surgical planning. This project studies dexterous and flexible hand interfaces for manipulating medical objects (3D volumetric data, camera navigation, segmented anatomical organs) of the kind used in real surgical procedures. Our goal is to develop a set of advanced knowledge-based virtual tools covering all aspects of the virtual environment, including two-handed interfaces, feature extraction and icon representation, image-guided surgery, interactive navigation within a patient's anatomical structures, tactile/auditory feedback, and constraint-based surgical simulation. These tools will integrate the latest visualization and virtual reality technologies to support more accurate diagnosis and enhanced surgical capabilities.

Image-based Rendering with Geometric Information

Image-based rendering uses a series of reference images (or environment maps) to describe a scene from a set of fixed viewpoints. Images for arbitrary viewpoints are then generated in real time by warping and combining these reference images. However, image-based rendering requires a vast amount of storage and cannot handle dynamic scenes. This project investigates methods for constructing hybrid VR systems that contain both geometry-based and image-based models (e.g. mapping image-based rendered images onto geometric objects, keeping depth information in the textures, and using adaptive texture mapping). The approach keeps rendering time constant regardless of geometric and illumination complexity. Since both alpha blending and texture mapping are supported by graphics hardware, the approach can speed up the rendering process.
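To illustrate the "combining" step, the sketch below computes blending weights for reference views from the angle between the desired viewing direction and each reference direction, keeps the k closest views, and alpha-blends their color samples. Inverse-angle weighting is a common heuristic assumed here for illustration, not necessarily the project's scheme; `blend_weights` and `blend_pixel` are hypothetical names, and the per-image warping is omitted (samples are assumed to be already warped to the new viewpoint).

```python
import math
from typing import Dict, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def angle_between(a: Vec3, b: Vec3) -> float:
    """Angle in radians between two (non-zero) direction vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    # Clamp to guard acos against rounding slightly outside [-1, 1].
    return math.acos(max(-1.0, min(1.0, dot / (na * nb))))


def blend_weights(view_dir: Vec3, ref_dirs: Sequence[Vec3], k: int = 2, eps: float = 1e-6) -> Dict[int, float]:
    """Hypothetical normalized inverse-angle weights for the k reference views closest to view_dir."""
    angles = [angle_between(view_dir, d) for d in ref_dirs]
    nearest = sorted(range(len(ref_dirs)), key=lambda i: angles[i])[:k]
    raw = {i: 1.0 / (angles[i] + eps) for i in nearest}
    total = sum(raw.values())
    return {i: w / total for i, w in raw.items()}


def blend_pixel(colors: Sequence[Vec3], weights: Dict[int, float]) -> Vec3:
    """Alpha-blend per-reference color samples (assumed already warped to the new view)."""
    out = [0.0, 0.0, 0.0]
    for i, w in weights.items():
        for ch in range(3):
            out[ch] += w * colors[i][ch]
    return tuple(out)
```

On graphics hardware the same weights would typically be supplied as per-layer alpha values, which is why blending adds little cost.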

Wavelet-based Multiresolution Modeling of Surfaces

A key goal is to develop new adaptive wavelet constructions for geometric models that achieve high fitting quality while remaining efficient. We have proposed subdivision-based biorthogonal wavelets, together with their analysis, for both closed and open triangular meshes with subdivision connectivity. Multiresolution analysis based on subdivision is more balanced than existing wavelet analyses and offers more levels of detail for processing polygonal models. We have investigated novel biorthogonal polar wavelets, which generate more natural subdivision surfaces and avoid the ripples and saddle points that other subdivision schemes may produce. We are further studying how to construct matrix-valued subdivision wavelets, and we propose an optimal interpolatory wavelet transform for multiresolution triangular meshes, especially for complex models. Subdivision-based wavelets exploit the ability of wavelets to represent signal details at multiple resolutions, opening new avenues for geometric modeling and display.
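As a one-dimensional analogue of interpolatory subdivision wavelets, the sketch below applies a lifting scheme to a closed (periodic) sequence, such as one coordinate of a closed polygon. Odd samples are predicted from even samples with the 4-point interpolatory subdivision stencil (-1, 9, 9, -1)/16, the prediction error is stored as detail, and an update step adds (d[i-1] + d[i])/4 to each coarse sample, giving a biorthogonal transform that inverts exactly. This is an illustration on curves under those assumptions, not the triangular-mesh or polar constructions described above; all function names are hypothetical.

```python
from typing import List, Tuple


def _predict(even: List[float], i: int) -> float:
    """4-point interpolatory stencil for the odd sample between even[i] and even[i+1] (periodic)."""
    m = len(even)
    return (-even[(i - 1) % m] + 9 * even[i] + 9 * even[(i + 1) % m] - even[(i + 2) % m]) / 16.0


def forward_step(signal: List[float]) -> Tuple[List[float], List[float]]:
    """One analysis level; assumes len(signal) is even."""
    even, odd = signal[0::2], signal[1::2]
    m = len(even)
    detail = [odd[i] - _predict(even, i) for i in range(m)]                       # predict
    coarse = [even[i] + (detail[(i - 1) % m] + detail[i]) / 4.0 for i in range(m)]  # update
    return coarse, detail


def inverse_step(coarse: List[float], detail: List[float]) -> List[float]:
    """Undo the update, then the prediction, and interleave even/odd samples."""
    m = len(coarse)
    even = [coarse[i] - (detail[(i - 1) % m] + detail[i]) / 4.0 for i in range(m)]
    odd = [detail[i] + _predict(even, i) for i in range(m)]
    out: List[float] = []
    for e, o in zip(even, odd):
        out.extend((e, o))
    return out


def decompose(signal: List[float], levels: int) -> Tuple[List[float], List[List[float]]]:
    """Multi-level analysis; details are listed finest level first."""
    coarse, details = list(signal), []
    for _ in range(levels):
        coarse, d = forward_step(coarse)
        details.append(d)
    return coarse, details


def reconstruct(coarse: List[float], details: List[List[float]]) -> List[float]:
    """Multi-level synthesis, coarsest details first."""
    signal = list(coarse)
    for d in reversed(details):
        signal = inverse_step(signal, d)
    return signal
```

Because each lifting step is undone by subtracting exactly what was added, reconstruction is exact up to floating-point rounding, and smooth data produces small detail coefficients that can be truncated for level-of-detail display.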

Wavelet-based surface modeling (project poster)

- Haptics Sculpting in Virtual Volume Space

- Virtual & Augmented Parks in Hong Kong

- Interactive Deformable Modeling on GPUs

- Multi-touch Interactions of VR Medical Data

- Compact Video Synopsis for Online Briefing

- Web-based Interactive Telemedicine Systems

- Efficient Image/Video Retexturing on GPUs

- Interactive Simulation of Fluid Dynamics

- Mobile and Wireless Virtual Environments